The Mind Behind Qwen3: An Exclusive Interview with Alibaba's Zhou Jingren

Alibaba's Tongyi Qianwen (Qwen) series continues to push the boundaries of AI innovation with the release of Qwen3, a hybrid reasoning model that dynamically balances "fast" and "slow" thinking. As the first open-source model of its kind globally, Qwen3 combines cost efficiency with state-of-the-art performance, outperforming larger competitors like DeepSeek-R1 while empowering developers with scalable solutions. In an exclusive interview with Chinese media LatePost, Zhou Jingren, CTO of Alibaba Cloud and head of the Tongyi Lab, reveals the strategic vision behind Alibaba’s AGI-driven roadmap, its early bets on multimodal AI, and why open-source is non-negotiable in the race to redefine intelligent systems.

Alibaba Releases Qwen3, a Hybrid Reasoning Model, and Interviews Alibaba Cloud CTO Zhou Jingren

In the early hours of today (April 29), Alibaba released Qwen3, the latest generation of base models in its Tongyi Qianwen (Qwen) series, and open-sourced 8 versions.

Qwen3 is China's first hybrid reasoning model and the world's first open-source one. It fuses "reasoning" and "non-reasoning" modes in a single model, so that, like a human, it can choose between "fast" and "slow" thinking depending on the problem.

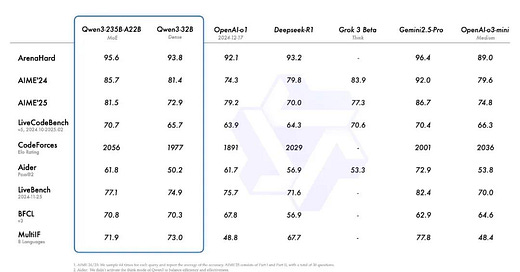

The flagship Qwen3 model, the MoE (Mixture-of-Experts) model Qwen3-235B-A22B, has 235 billion total parameters and 22 billion active parameters. On multiple major benchmarks it surpassed the full version of DeepSeek-R1, which has 671 billion total parameters and 37 billion active parameters. The smaller MoE model Qwen3-30B-A3B activates only 3 billion parameters in use, less than 1/10 of QwQ-32B, the dense, pure reasoning model from the previous Qwen series, yet it performs better. Fewer parameters with better performance means developers can get better results at lower deployment and usage cost. (Image from the official Tongyi Qianwen blog. Note: MoE models activate only a portion of their parameters each time they are used, which makes them more efficient to run, so they are described by two figures: total parameters and active parameters.)
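The distinction between total and active parameters can be made concrete with a little arithmetic. The sketch below uses only the parameter counts quoted above; the `ModelSize` class and its field names are illustrative and not part of any Qwen tooling.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelSize:
    """Parameter counts in billions, as quoted in the article."""
    name: str
    total_b: float
    active_b: float  # equals total_b for a dense model

    @property
    def active_fraction(self) -> float:
        # Share of the weights actually used for each token.
        return self.active_b / self.total_b


qwen3_flagship = ModelSize("Qwen3-235B-A22B", 235, 22)
deepseek_r1 = ModelSize("DeepSeek-R1", 671, 37)
qwen3_small = ModelSize("Qwen3-30B-A3B", 30, 3)
qwq_32b = ModelSize("QwQ-32B", 32, 32)  # dense: every parameter is active

for m in (qwen3_flagship, deepseek_r1, qwen3_small, qwq_32b):
    print(f"{m.name}: {m.active_fraction:.1%} of parameters active per token")

# Active parameters of the small MoE model relative to the dense QwQ-32B.
ratio = qwen3_small.active_b / qwq_32b.active_b
print(f"Qwen3-30B-A3B uses {ratio:.1%} of QwQ-32B's active parameters")
```

The last line bears out the "less than 1/10" claim: 3 billion active parameters is about 9.4% of QwQ-32B's 32 billion.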

Before Qwen3's release, we interviewed the top figure in Alibaba's large model R&D: Zhou Jingren, Alibaba Cloud CTO and head of Tongyi Lab. He is also the main decision-maker behind Alibaba's open-source large models.

To date, the Qwen series models have been downloaded 300 million times in total (combining data from communities such as Hugging Face and ModelScope), 250 million of them in the last 7 months. More than 100,000 derivative models have been built on Qwen, ranking first globally.

Alibaba was open-sourcing AI models before ChatGPT ignited the current AI boom. In early November 2022, Alibaba launched ModelScope, an open-source model sharing community, and in one go open-sourced nearly 400 models that DAMO Academy had developed over its 5 years of existence. In August 2023, Alibaba went further and decided to open-source the Qwen series of large models; it has since open-sourced more than 200 models in total.

From late 2022 through 2023, open-sourcing large models was not an easy choice: it means accepting feedback from developers and the market and being tested by all parties, which demands very strong capabilities. Zhou Jingren and the Alibaba large model team chose to accept that test.

After that, it took Qwen just over a year to come from behind among the world's open-source models: in October last year, the number of Qwen derivative models passed 80,000, overtaking Meta's Llama series, which had been open-sourced earlier, and Qwen has held the lead ever since.

Changes in the number of derivative models for three open-source model series: Qwen series, Llama series, Mistral series.

Apple is rumored to have chosen Qwen as its large model partner in China; Fei-Fei Li's team used Qwen to train ultra-low-cost reasoning models; the general-purpose Agent product Manus calls Qwen for decision planning; and some of the smaller DeepSeek-R1 models were also trained using Qwen.

But compared with its influence in the tech community, Qwen keeps a relatively low profile in the broader business world, and several of its key releases have had their "headlines grabbed".

"What does this mean?" Zhou Jingren gave us a questioning look. He had not heard the joke.

Zhou Jingren wears gold-rimmed glasses. His tone barely fluctuates and his speaking pace is almost constant, steady like a program. During the 3-hour interview, what he talked about most was "technical laws".

We asked him how he thinks about competition over the timing of model updates and releases. He said:

"We cannot predict when others will release. In R&D, trying to adjust the rhythm at the last minute does not work; it goes against the laws of R&D."

On Alibaba Group CEO Wu Yongming's statement in February this year that "Alibaba's primary goal now is to pursue AGI", Zhou Jingren said:

"When the outside world hears what Wu Yongming announced, it feels as if Alibaba suddenly made a big shift, but that is not the case. By the laws of technological development, nothing can be achieved in one step without prior accumulation."

If an open-source model cannot reach first place, its significance is greatly diminished, and it may also forfeit closed-source commercial opportunities. Zhou Jingren, however, downplayed the pressure behind this choice:

"By the laws of technological development, not open-sourcing actually carries the greater risk, because open-source will at least catch up with closed-source and may even develop better."

He holds some views that differ from most people's, and he states them in the same flat, colorless tone:

"Actually, o1 does not count as defining a new paradigm. Letting models learn to think is not a paradigm but a capability."

Zhou Jingren joined Alibaba from Microsoft in 2015, led cutting-edge technology R&D at iDST and DAMO Academy, and also worked on practical business implementation at Ant and Taobao.

Below is LatePost's interview with Zhou Jingren, in which he reviews how Alibaba developed its large models, the key decisions on open-sourcing, and his thinking on current large model technology.

Interview Q&A:

LatePost: There's a joke going around about Alibaba: that you are the Wang Feng of the large model field.

Zhou Jingren: I don't know about this. What does it mean?

LatePost: It refers to Alibaba's major releases often having their "headlines grabbed". First, the Spring Festival update of the base model Qwen2.5-Max was overshadowed by DeepSeek's reasoning model R1; later in March, the release of the reasoning model QwQ-32B coincided with the release of the very popular Agent product Manus on the same day.

Zhou Jingren: One particular day's traffic is actually not that important.

LatePost: What is truly important?

Zhou Jingren: Thinking more proactively, adhering more firmly to one's own technical path and rhythm.

LatePost: What is Alibaba's path towards AGI?

Zhou Jingren: First, one of our core beliefs is that the development of large models is inseparable from the support of the cloud system. In both training and inference, every breakthrough looks on the surface like an advance in model capability, but behind it is a coordinated upgrade of the entire cloud computing, data, and engineering platform.

On model capabilities, reasoning models are what everyone discusses most right now. We continue to explore how to let models think more like humans and, in the future, even self-reflect and self-correct.

Multimodality is also an important path towards AGI. The human brain also has parts that process text, parts that process vision, sound. We want to enable large models to understand and connect various modalities.

We are also exploring new learning mechanisms, including how to enable models to learn online, continuously learn, and self-learn. (Note: Current model training is "offline learning", each upgrade requires redoing pre-training, updating the version.)

To improve the performance and efficiency of the cloud system, we will strengthen joint software-hardware optimization across cloud and models. This year in particular, engineering capability, meaning the performance and efficiency of the whole cloud system combined with AI, will become a core competitive advantage.

LatePost: Won't doing so many things at once mean losing focus?

Zhou Jingren: Large model development has moved from the very beginning of its early stage into the middle of its early stage, and it is no longer possible to improve only single-point capabilities. True artificial general intelligence requires multiple capabilities such as multimodality, tool use, Agent support, and continuous learning; waiting for a given direction to emerge before acting is too late. It is not just us: leading companies all conduct preliminary research in multiple directions in advance.

LatePost: Which directions of Alibaba's roadmap does the just-released Qwen3 reflect?

Zhou Jingren: Qwen3 is a hybrid reasoning model. It provides both a "reasoning mode" and a "non-reasoning mode": the former is used for complex logical reasoning, math, and programming, while the latter handles everyday instructions and efficient dialogue. Hybrid reasoning models will be an important trend in large model development going forward.

LatePost: Why will it become a trend? What are the benefits of hybrid reasoning models?

Zhou Jingren: It can better balance performance and cost. In "reasoning mode", the model will execute intermediate steps like decomposing problems, step-by-step deduction, verification, etc., giving a "well-considered" answer; in "non-reasoning mode", it can quickly follow instructions to generate answers.

Qwen3 also has a "thinking budget" setting—developers can set the maximum token consumption for deep thinking themselves, which can better meet the different needs of developers regarding performance and cost.
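One way to picture a thinking budget is as a cap on how many tokens the model may spend in its reasoning phase before it has to answer. The toy sketch below illustrates only that idea and is not Qwen3's actual mechanism: `generate_with_budget`, the token lists, and the whitespace-split "tokens" are all hypothetical stand-ins.

```python
from typing import Iterable, List, Tuple


def generate_with_budget(
    thinking_tokens: Iterable[str],
    answer_tokens: Iterable[str],
    thinking_budget: int,
) -> Tuple[List[str], List[str], bool]:
    """Cap the reasoning phase at `thinking_budget` tokens.

    Returns (kept reasoning tokens, answer tokens, whether reasoning was cut).
    A budget of 0 behaves like a "non-reasoning mode": answer directly.
    """
    if thinking_budget < 0:
        raise ValueError("thinking_budget must be non-negative")

    kept: List[str] = []
    truncated = False
    for token in thinking_tokens:
        if len(kept) >= thinking_budget:
            truncated = True  # budget exhausted: stop thinking, go answer
            break
        kept.append(token)
    return kept, list(answer_tokens), truncated


# Hypothetical token streams standing in for real model output.
reasoning = "split the problem , solve each part , check the result".split()
answer = "The answer is 42 .".split()

for budget in (0, 4, 100):
    kept, out, cut = generate_with_budget(reasoning, answer, budget)
    print(f"budget={budget:>3} thinking_tokens_used={len(kept)} truncated={cut}")
```

In a real model the answer would depend on whatever reasoning was kept; here it is fixed to keep the sketch small. The point is the trade-off a developer controls: a larger budget allows more deliberation at a higher token cost.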

LatePost: Then what are the costs and difficulties of doing this?

Zhou Jingren: Hybrid reasoning models are built through mixed training on reasoning and non-reasoning modes. This requires the model to learn two different output distributions, which is a real test of the training strategy. So designing and training a hybrid reasoning model is far harder than building a pure reasoning model.

Qwen3's post-training phase also mixes the two modes, which is equivalent to merging the reasoning model QwQ series with the instruction-tuned Qwen2.5-instruct series and combining the strengths of both in one model.

LatePost: How does Alibaba manage its model update rhythm now? Release timing and publicity have also become points of competition among companies; for example, OpenAI has several times rushed out updates just ahead of major Google releases.

Zhou Jingren: We certainly take this into account, but in the end what matters is not how well a release is promoted; it is the feedback from developers and the market.

Besides, we cannot predict when others will release. In R&D, trying to adjust the rhythm at the last minute does not work; it goes against the laws of R&D.

LatePost: The Qwen series is second only to Llama globally in downloads and has the most derivative models of any open-source model, yet the market is not sufficiently aware of this. Does that trouble Alibaba? What have you considered doing to increase your technical influence?

Zhou Jingren: Actually, Tongyi Qianwen's reach is quite good. The core is still that the open-sourced models must be strong enough; otherwise no other method will help.

LatePost: When will Tongyi Qianwen deliver a true breakout, an advance that dazzles a wider audience, similar to the attention Sora and DeepSeek-R1 attracted?

Zhou Jingren: Tongyi Qianwen has many highlights coming, but whether they dazzle everyone is up to everyone. I feel we sometimes pay too much attention to the present moment and to who is slightly ahead of whom. In the long run, if you truly believe AGI is the final destination, today's chasing and overtaking is just one phase of the process.

What matters more is knowing you are on the right path and innovating continuously over the long term. So don't get too hung up on whether, today or tomorrow, you are a step ahead of others again.

LatePost: Doesn't Alibaba's management, Wu Yongming for example, care whether Tongyi Qianwen is ahead of others at this very moment?

Zhou Jingren: We must certainly stay in the first tier. We also aim for each generation of models to bring technological breakthroughs and to represent the highest level in the field at the time.

At the same time, we have stressed internally many times that this is not a short-term competition. It is not about how tightly wound you are at any given moment but about long-term innovation. Technological development as a whole requires determination, and we also hope the industry can be given some time and patience.

"Without prior accumulation, one cannot talk about being AI-centric at this moment"

LatePost: At the Alibaba earnings call in February this year, Alibaba Group and Alibaba Cloud CEO Wu Yongming said "Alibaba's primary goal now is to pursue AGI, continuously developing large models that expand the boundaries of intelligence". As the head of Alibaba's large models, when did you clearly define the biggest goal as AGI?

Zhou Jingren: When the outside world hears what Wu Yongming announced, it feels as if Alibaba suddenly made a big shift, but that is not the case. By the laws of technological development, nothing can be achieved in one step without prior accumulation.

Alibaba invested in AI very early. Before the Transformer, iDST (Alibaba Institute of Data Science & Technologies, founded 2014) and DAMO Academy (founded 2017) were already doing cutting-edge AI research. After the Transformer, we began building multimodal MoE (Mixture-of-Experts) models in 2019, and in 2021 we released M6, a trillion-parameter multimodal MoE large model.

The core of the Transformer lies in pre-training. It does not start from one specific task; instead, it uses large amounts of data to train a model that can adapt to many tasks.

This is a major breakthrough in machine learning. Previous models required selecting and labeling data for a specific problem, and their capabilities did not transfer easily, so each scenario often needed its own model. For example, face recognition does not transfer easily to object recognition. We recognized the generalization potential of pre-training, so we invested in this direction relatively early.

LatePost: Initially Google proposed Transformer for handling NLP (Natural Language Processing) problems, later OpenAI also achieved breakthroughs first in language with GPT. But Alibaba first focused on multimodal large models, why this choice?

Zhou Jingren: At that time, I was also responsible for Taobao's search and recommendation. We realized then that understanding a product is not just a matter of understanding its images or its text description in isolation; there are also user reviews and all kinds of click and browsing data. A more precise understanding has to be a multimodal, comprehensive one.

LatePost: So at that time it was more about serving e-commerce scenarios, not yet expanding the boundaries of intelligence?

Zhou Jingren: E-commerce was one of the target scenarios. On the other hand, looking from the evolutionary logic of AGI, multimodality is also indispensable; for AI to be able to use tools, even to act in the real physical world in the future, requires multimodal capabilities.

This example also shows clearly that Alibaba has been a technology company from very early on. Taobao's success is not just about selling goods online; both the high concurrency of Double Eleven and more precise product recommendations require substantial technical support.

Alibaba also started working on cloud computing in 2009, and in 2014 it began building a series of cloud-based AI and data platforms, such as MaxCompute.

Without this prior accumulation, one cannot talk about being AI-centric at this moment.

LatePost: From starting on pre-trained models in 2019 to the large model boom in 2023, how did Alibaba's understanding of and investment in large models change?

Zhou Jingren: In the autumn of 2022, before ChatGPT's release, Alibaba Cloud was the earliest in the industry to propose MaaS (Model as a Service). At that time, large models weren't popular yet, so it didn't resonate much with people.

But at that time we already saw that models are important production elements of the new era. In the previous generation of cloud computing, the IaaS (Infrastructure as a Service) layer consisted of computing elements like compute, storage, network, etc., above that was the PaaS (Platform as a Service) layer with production elements like data platforms, machine learning platforms, etc. Models, however, fuse data and computation, representing a higher-order product. There is a path here from IaaS to PaaS to MaaS.

Another important action is open-sourcing. We started open-sourcing very early too, tracing back to launching the open-source model sharing community "ModelScope" in 2022, and later deciding to open-source the Tongyi Qianwen large model series in August 2023. Not many people paid attention then, but today everyone sees the value of open-source more clearly.

LatePost: Is open-sourcing a competitive strategy for Alibaba? For example, your former Microsoft colleague Harry Shum once said: first place is always closed-source; second place open-sources.

Zhou Jingren: There are many successful examples of open-source. In the previous generation of big data systems, for instance, the open-source Spark and Flink became mainstream.

We open-source large models based on two judgments. First, models will become core production elements, and open-sourcing helps them spread, which can drive rapid development across the whole industry. Second, open-source has become an important driver of innovation for large models.

This innovation comes from several sources. Open-source lets more talented people around the world take part in technological innovation and push the technology forward together. It also lowers the barrier for enterprises to use models: because open-source is free, enterprises can try integrating it into their business without hesitation, which in turn encourages more developers inside enterprises to contribute to open-source. So the technical innovation of community developers and the feedback from enterprises both help build the technical ecosystem and advance the technology.

This is the consistent logic behind Alibaba creating the ModelScope community and open-sourcing Tongyi Qianwen; it was not a decision made off the cuff at some point in time. Initially, the outside world didn't quite understand it, and ModelScope was obscure when it first launched, but today it has become China's largest model community.

LatePost: What if open-sourcing isn't done well, and instead loses closed-source commercial opportunities? When discussing open-sourcing back then, what discussions and concerns were there among Alibaba's senior management?

Zhou Jingren: You might want to hear some stories of heated discussions, but actually there weren't any.

Alibaba's vision is "to make it easy to do business anywhere"; the original purpose of Alibaba Cloud is to enable enterprises to achieve technological and business innovation efficiently on the cloud; and open-sourcing large models is meant to help enterprises use large models in their business more easily. These three visions are highly consistent and follow one line of thought.

At the same time, by the laws of technological development, not open-sourcing actually carries the greater risk, because open-source technology will at least catch up with closed-source and often develops faster and becomes stronger. Android and Spark are examples.

LatePost: When did you feel the Tongyi Qianwen open-source ecosystem had successfully taken off?

Zhou Jingren: First, look at developers' choices. Last year our share of downloads on Hugging Face was over 30%, and the number of derivative models based on Tongyi Qianwen is also the highest, already exceeding 100,000. That counts only the models reported back to the developer community; the actual number is higher.

Second, look at performance metrics. For example, our previously released QwQ-32B surpassed R1 on LiveBench, the benchmark created by LeCun, making it the best open-source reasoning model, and that was not even the full version of QwQ. (Note: LiveBench evaluates models on multiple complex dimensions including math, reasoning, programming, language understanding, instruction following, and data analysis; it was compiled under the leadership of Turing Award winner and Meta Chief AI Scientist Yann LeCun.)

Tongyi Qianwen is in fact the world's best, most complete, and most widely used open-source model; the market is fairly unanimous on this.

LatePost: The Alibaba Tongyi Qianwen series was open-sourced later than Meta Llama, but surpassed it. What was Llama's mistake, or perhaps what did you do right?

Zhou Jingren: We pay close attention to developer needs. Open-sourcing is not just releasing the code or model weights and being done; it is about truly enabling developers to use them.

So each time we open-source, in deciding what to release and which sizes to offer, we weigh the different needs of different developers in terms of cost and capability. For example, Tongyi Wanxiang recently open-sourced a version that can run on consumer-grade graphics cards, precisely to reach a broader range of developers, because many people do not have huge servers. True open-source means making it convenient for everyone to use the models and contribute actively.

LatePost: This time Qwen3 open-sourced 8 versions in total, 6 of which are dense models, with parameters from 0.6B to 32B, 2 are MoE (Mixture-of-Experts) models, one 30B (3B active), one 235B (22B active). Why this combination of sizes?

Zhou Jingren: It is simply about meeting the needs of different developers, from individuals to enterprises, as far as possible. For example, 4B can run on mobile devices, 8B is recommended for PCs or in-vehicle systems, and 32B is the size enterprises favor most, suitable for large-scale commercial deployment. MoE models need to activate only a small number of parameters to achieve strong performance, which gives better cost-effectiveness.
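The size guidance in this answer amounts to a lookup from deployment target to model size. A minimal sketch, assuming hypothetical target labels (`mobile`, `pc_or_automotive`, `enterprise`) and a hypothetical helper `recommend_size`; only the three size recommendations themselves come from the interview.

```python
from typing import Dict

# Recommendations as stated in the interview; the keys are made-up labels.
RECOMMENDED_SIZE: Dict[str, str] = {
    "mobile": "4B",
    "pc_or_automotive": "8B",
    "enterprise": "32B",
}


def recommend_size(target: str) -> str:
    """Return the dense model size suggested for a deployment target."""
    try:
        return RECOMMENDED_SIZE[target]
    except KeyError:
        known = ", ".join(sorted(RECOMMENDED_SIZE))
        raise ValueError(
            f"unknown target {target!r}; expected one of: {known}"
        ) from None


print(recommend_size("mobile"))
```

A real selection would also weigh memory, latency, and whether an MoE variant fits the hardware; the table only records the rule of thumb given here.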

LatePost: This time, are there new supporting tools open-sourced at the Infra layer to help developers use Qwen better?

Zhou Jingren: Qwen3 supports the two mainstream inference optimization open-source frameworks, vLLM and SGLang, right from the start. Qwen3 also natively supports MCP (Model Context Protocol, developed by Anthropic). Combined with our Qwen-Agent framework open-sourced in January this year, Agent developers can integrate tools via MCP or other methods to quickly develop agents.

LatePost: I'd like to check something. There are reports that after DeepSeek-R1 was released, over 20% of developers using Tongyi Qianwen models switched to DeepSeek models. How big a challenge is this migration for you? Can Qwen3 reverse it?

Zhou Jingren: We have not observed significant migration. Besides, it is normal for developers to try different models. The open-source community is inherently non-exclusive, and everyone chooses according to their own needs. We believe that as long as Tongyi Qianwen's model capabilities keep improving, developers will be willing to come to us.

LatePost: Within the industry, it's thought that o1 and R1 opened a new paradigm. How do you view their value?

Zhou Jingren: Actually, o1 does not really count as defining a new paradigm. Letting models learn to think is not a paradigm but a capability. Likewise, multimodality is not a paradigm either; these are all normal steps in model evolution.

Many things in o1, like CoT (Chain-of-Thought), Reinforcement Learning (RL), existed very early on. You could even say everything is RL, including every model iteration, which involves adding feedback from the previous version when training the new version.

Paradigm is a very heavy word. In the past, what could truly be called a paradigm shift, I believe, is the entire method of training base models.

LatePost: What do you think are the relatively certain advancements in the large model field this year?

Zhou Jingren: There are two main lines. First, model capabilities will keep improving in human-like thinking and multimodality. Second, models and the underlying cloud computing systems will become more deeply integrated, improving training and inference efficiency together and making models easier to use and more widespread.

LatePost: Will there be any bottlenecks in this process? Although reinforcement learning for reasoning models is considered to have great potential, it rests on a good pre-trained model; for example, Alibaba's reasoning model QwQ-32B is based on Qwen2.5-32B, and R1 is based on DeepSeek-V3. And recently, xAI's pre-trained model Grok 3, trained on 200,000 GPUs, brought only a 1.2% improvement (its gain in total score over the previous first place on Chatbot Arena). When the Scaling Laws of pre-training slow down, how long can reasoning models built on that foundation keep improving?

Zhou Jingren: Reasoning models do all rely on powerful base models; this is a consensus. But one cannot simply say that the Scaling Laws of pre-training have reached their limit.

If you look only at text, the upper limit of the data is in sight, but multimodal data, such as large amounts of visual data, has not been used yet. At the same time, the boundaries between pre-training, post-training, and even inference are blurring, and integrating these stages may also bring improvements. In terms of learning methods, besides offline training, everyone is also exploring online learning, continuous learning, and so on.

Therefore, whether looking at data, training methods, or learning mechanisms, the capability of base models still has room for improvement.

"Cloud and large models have the same priority, must advance side by side"

LatePost: You are now both Alibaba Cloud CTO and head of Tongyi Lab, and need to keep both Alibaba Cloud and the Tongyi Qianwen large model in leading positions. Which of the two has higher priority?

Zhou Jingren: The priority is the same, because the two cannot be separated. Models are an important part of cloud services, and for models to be highly cost-effective they need the support of the cloud system. Both must advance side by side.

LatePost: If the Tongyi Qianwen large model cannot continuously maintain its lead, will it also adversely affect the Alibaba Cloud business, which is currently leading?

Zhou Jingren: The influence runs both ways. If the model is not strong, the cloud's intelligent services are diminished; and if the model is strong enough but cloud capabilities cannot keep up, it is impossible to provide highly cost-effective services.

In the AI era, what customers want is not a single model or cloud capability, but a comprehensive experience of strong model + low cost + high elasticity.

In technical terms, training and inference used to be considered separately, but now training must take inference efficiency into account, and inference must take into account whether the model is easy to train and whether it can converge. These are two sides of the same coin and require joint optimization as a whole.

LatePost: DeepSeek performed extreme Infra optimization for model training and inference based on its own GPU cluster. Could it potentially become a third-party AI cloud player?

Zhou Jingren: It looks like it has compute power, an Infra layer, and models, but it doesn't constitute a complete cloud service.

Cloud services must offer extreme elasticity and guarantee various SLAs (Service Level Agreements). For example, some calls require extremely low latency, while others need higher throughput and lower cost, and stability and security are needed on top of that. This is what true enterprise-grade cloud service means. It cannot go down during use or suddenly show high latency; enterprises cannot put important business on a service like that.

LatePost: When you saw the final summary of DeepSeek Infra open-source week, stating their cost profit margin for providing API services based on their own compute power reached 545% (translating to a gross margin of 85%), what was your feeling?

Zhou Jingren: Their system optimization is indeed outstanding, but this is an idealized way of calculating. It should not be judged by the logic of cloud, because when you truly provide cloud services, you cannot pick and choose which customers to serve during peak periods, or lower service quality at peak.

A complete MaaS service also does not offer only one model; it is compatible with many, which is why Alibaba Cloud supported DeepSeek from the beginning. The logic of cloud is to optimize performance well for different models and leave the choice to the customer.
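The conversion in the question above, from a 545% cost profit margin to a gross margin of roughly 85%, follows from the two definitions. A short sketch, assuming "cost profit margin" means profit divided by cost and "gross margin" means profit divided by revenue; the function name is illustrative.

```python
def gross_margin_from_cost_profit_margin(cost_profit_margin: float) -> float:
    """Convert profit/cost into profit/revenue.

    With cost C and profit P = m * C, revenue is R = C + P = (1 + m) * C,
    so gross margin = P / R = m / (1 + m).
    """
    if cost_profit_margin <= -1:
        raise ValueError("cost profit margin must be greater than -100%")
    return cost_profit_margin / (1 + cost_profit_margin)


margin = gross_margin_from_cost_profit_margin(5.45)  # 545%
print(f"gross margin: {margin:.1%}")
```

Running it gives a gross margin of about 84.5%, which rounds to the 85% quoted. As the answer notes, this is an idealized figure, not what a full cloud service would see.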

LatePost: In the new AI cloud opportunity, who are Alibaba Cloud's actual competitors?

Zhou Jingren: Domestically, we are the leader. Internationally there are many competitors; AWS, Azure, and GCP (Google Cloud) are all worth learning from.

LatePost: Isn't ByteDance's Volcano Engine, which is investing aggressively in AI cloud, a competitor?

Zhou Jingren: Volcano has developed rapidly in recent years, and we welcome everyone to jointly promote the development of the AI industry. The market is still very large.

LatePost: Alibaba recently announced a plan to invest 380 billion yuan in AI and cloud computing infrastructure. But there is a lag between investing in the foundational layer and applications flourishing. Are you worried that, by then, applications will not generate that much demand for AI cloud?

Zhou Jingren: The exponential growth trend of AI applications is very clear. Over the past year, Alibaba Cloud's MaaS service has grown very fast, even to the point where supply cannot meet demand.

LatePost: Besides chip quantity, computational efficiency, etc., what other overlooked aspects do you think exist in AI foundational layer investment?

Zhou Jingren: Power supply will be a challenge in the future. So energy-aware optimization will also become a technical direction, which is finding ways to reduce energy consumption per token.

LatePost: Does Alibaba already have any preparations?

Zhou Jingren: For example, when building intelligent computing centers, besides weighing construction costs and service latency against users' business needs, we also consider nearby natural energy supply, climate conditions, and so on. These are all part of the cloud's foundational system, and the know-how we have accumulated over many years is becoming increasingly important in the AI era.

"There are no shortcuts in scientific and technological R&D"

LatePost: The common choice for leading large companies facing the AI opportunity is to cover everything from compute to the model layer and to explore technical directions from language and reasoning to multimodality and on to more cutting-edge areas such as autonomous learning. But some believe a more focused approach like DeepSeek's does more to clarify a team's priorities. For example, DeepSeek previously also worked on multimodality, but in the second half of last year it narrowed its focus to language and reasoning. Compared with this more focused approach, how does Alibaba handle internal resource allocation while pursuing multiple directions?

Zhou Jingren: Technological innovation itself requires multiple attempts, but it is not about trying everything indiscriminately. We first run small-scale experiments to verify whether a direction is correct, then decide whether to increase investment.

The R&D process itself is a pipeline: some directions are in preliminary research, some are wrapping up, and each has its own rhythm. Today's AI R&D is a complete system, from efficient experimentation to training and finally to output.

LatePost: Among the many pipelines, what signals indicate that a direction has great potential?

Zhou Jingren: You might want to hear a big secret, but there are actually no tricks or shortcuts. Generally, it starts with a hypothesis, then small-scale experiments to obtain preliminary evidence, followed by larger-scale experiments. Internally, we have scientific evaluation methods and data support that help good directions stand out. This is a common characteristic of organizations that can continuously achieve results.

LatePost: How does one come up with high-quality hypotheses? And how do you improve the efficiency of running multiple experiments simultaneously?

Zhou Jingren: One is directional judgment, which comes from the technical caliber of outstanding talent. The second is scientific verification methods, which rely on experiments and data rather than on one person's whim.

LatePost: Suppose I am a researcher at Alibaba's Tongyi and have an idea that requires 100 cards for an experiment. How can I obtain the resources I need promptly and smoothly?

Zhou Jingren: We have platforms for rapid experimentation that support trial and error with small amounts of resources. Hoping to hit the jackpot on an ultra-large-scale model right from the start is impossible.

LatePost: Among your numerous pipelines, which ones have recently shown significant progress?

Zhou Jingren: Recently, everyone has been most focused on language and reasoning. We have also accumulated a lot in directions such as multimodal VL (Visual Language models) and audio models, all of which show significant improvements with Qwen3.

LatePost: R&D begins with talent. Some of Alibaba's key technical personnel have been hired away by companies like ByteDance with high salaries. How do you respond?

Zhou Jingren: Talent mobility is very normal. What matters more is that the team holds to its original aspiration and works together to produce outstanding work. That is the source of cohesion.

Meanwhile, model R&D today is not just innovation at the model and algorithm layers but a long-term systems engineering project that requires patience and steadfast investment. Starting today and stopping tomorrow has a huge impact on a team. Alibaba's investment in Tongyi Qianwen is very firm.

LatePost: We learned that in 2024, Tongyi promoted all researchers by one job level and raised salaries across the board. Was this a response to changes in the talent market?

Zhou Jingren: We have always incentivized the team. Competitive pay is necessary, but a high salary is not the only means.

LatePost: You told us in 2023 that in the AI era, research, technology, and product need to be more closely integrated and can no longer be kept as separate as before. Why, then, did Alibaba move the large model's 2C products from Alibaba Cloud to the Alibaba Information Intelligence Business Group managed by Wu Jia in the second half of last year?

Zhou Jingren: This isn't separation but greater specialization. Tongyi focuses on technology R&D, and the 2C product team focuses on user experience and operations. The division of labor is clearer, but collaboration is very close. For example, Quark is also using the latest Tongyi Qianwen models.

LatePost: How do you coordinate with Wu Jia now?

Zhou Jingren: We talk frequently, on a daily basis. One of Tongyi's important goals is to support products like Quark well, and these products in turn give us feedback for model R&D.

LatePost: Why does Tongyi need to build the Qwen Chat dialogue product itself?

Zhou Jingren: Qwen Chat won't have much product design. It is more about letting developers worldwide conveniently try the latest Tongyi Qianwen models.

LatePost: You are very calm. Since the AI boom began, has anything made you unusually excited?

Zhou Jingren: One is the rapid development of the technology itself. The second is that our past persistence has allowed us to make good progress. This is a very fortunate thing for anyone working in technology.

LatePost: Then is there anything that makes you anxious? For example, given your strength in multimodality, were you anxious when you first saw Sora?

Zhou Jingren: No. Why be anxious? When ChatGPT first came out, the industry was very anxious. Later, domestic models started competing and everyone became more rational. Sora is the same. Comparing our recently open-sourced video generation model Wanxiang 2.1 with Sora, external evaluations show that each side has its strengths and weaknesses.

LatePost: The premise of not being anxious is that you remain in the first tier. How does Alibaba know it has always been in the first tier of large models?

Zhou Jingren: We still look at market feedback. Saying how strong you are yourself doesn't mean much.

LatePost: Are there any ways to stay in the first tier over the long term? Right now everyone seems to lead the trend for just 30 days.

Zhou Jingren: Looking from within each organization, each model generation today improves on the capabilities of the previous one. Advantages accumulate gradually, building up generation after generation.