Featured Story

Alibaba Launches Robotics and Embodied AI Team

Alibaba Group has set up a dedicated Robotics and Embodied AI team, signaling its entry into the fast-growing race among global tech giants to bring artificial intelligence into the physical world.

News

Xiaomi Responds to Incident of Car Reportedly Driving Off on Its Own

AI

Afari Technology Unveils AI Plan and New Brand

Industry

ByteDance’s Doubao Translation Model Supports 28 Languages, Performance Comparable to GPT-4o

ByteDance has debuted its Doubao translation model, which offers two-way translation across 28 languages, performance comparable to GPT-4o, fluent output, and a 4K context window.

WAIC2025

Zhipu AI Launches GLM‑4.5, an Open-Source 355B AI Model Aimed at AI Agents

Chinese startup Zhipu AI (now rebranded as Z.ai) has open-sourced its new flagship model GLM‑4.5, a 355-billion-parameter foundation AI model. The July 28 ann...

WAIC2025

China Proposes “World AI Cooperation Organization” at WAIC 2025

Shanghai, July 28, 2025 – At the 2025 World Artificial Intelligence Conference (WAIC) in Shanghai, the Chinese government announced an initiative to establish a...

Latest News

China's Space Station Gets Its First Oven, Enabling On-Orbit Baking

BEIJING – Astronauts aboard China's space station have, for the first time, used an onboard oven to bake food in orbit, marking a significant upgrade to the "sp...

Starbucks Values China Business at $13 Billion in Major Partnership Deal

SHANGHAI – Starbucks Coffee Company announced today a strategic partnership with Boyu Capital, a leading Chinese alternative asset management firm, to form a jo...

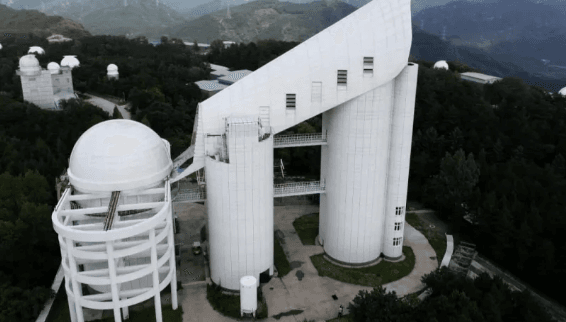

LAMOST Releases Record 28 Million Spectra, Cementing Global Lead in Astronomical Data

BEIJING, Nov. 4 - China's Large Sky Area Multi-Object Fiber Spectroscopic Telescope (LAMOST) has reinforced its position as the world's leading spectral survey ...

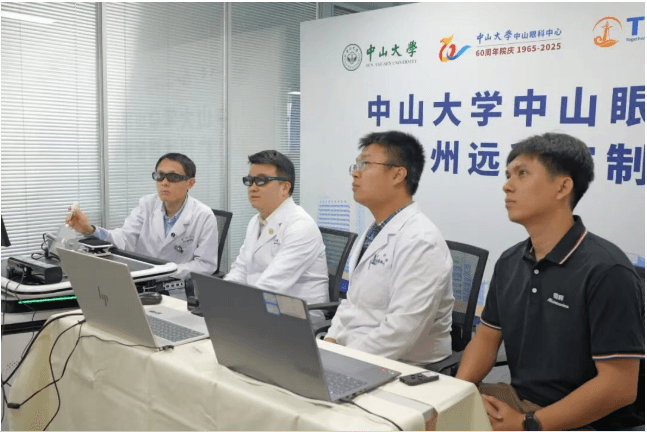

World's First Remote Robotic Subretinal Injection Performed in China

GUANGZHOU, China – A multidisciplinary team led by Zhongshan Ophthalmic Center at Sun Yat-sen University has successfully performed the world's first remote rob...

Harmony Intelligent Mobility Alliance Sets Two New Records in October

Harmony Intelligent Mobility Alliance announced that it delivered 68,216 vehicles in October 2025, the highest single-month delivery volume in its history.

AI Expert Zhou Shuchang Joins XPENG Motors as Senior Director of Autonomous Driving Algorithms

AI expert Dr. Zhou Shuchang, a founding team member of StepFun, has officially joined XPENG Motors as Senior Director of Autonomous Driving Algorithms, reporting directly to Liu Xianming, Head of Autonomous Driving at XPENG.

Nexperia China Issues Statement on Wafer Supply Disruption

In a statement released on November 2nd, Nexperia Semiconductor China Co., Ltd. informed customers that “Nexperia Netherlands has unilaterally decided to stop supplying wafers to the Dongguan-based assembly and testing facility (ATGD) starting October 26, 2025.”

NIO CEO William Li Responds to “When Will NIO Collapse?” Question: “We Must Be Profitable in Q4”

NIO CEO William Li addressed growing doubts about the company’s future, insisting that achieving profitability in Q4 is non-negotiable. Li reaffirmed confidence in NIO’s operations and EV market prospects as the automaker reports record deliveries and narrowing losses.
