XPeng and Peking University Paper Accepted to AAAI 2026, Proposing Efficient On-Vehicle Large Model Deployment

XPeng and Peking University have introduced FastDriveVLA, an efficient token-pruning framework that cuts the computational load of autonomous driving AI models by nearly 7.5× without sacrificing performance, enabling smarter real-time decisions on vehicle hardware.

A joint paper by XPeng Motors and the National Key Laboratory of Multimedia Information Processing at Peking University's School of Computer Science has been accepted to AAAI 2026, one of the world's top AI conferences. The acceptance rate for this year's conference stands at just 17.6%.

Titled "FastDriveVLA: Efficient End-to-End Driving via Plug-and-Play Reconstruction-based Token Pruning," the paper introduces FastDriveVLA, an efficient visual token pruning framework designed specifically for end-to-end vision-language-action (VLA) models in autonomous driving.

As VLA models scale, the massive number of visual tokens creates significant computational burdens on vehicle-side hardware. FastDriveVLA addresses this through a plug-and-play pruner called ReconPruner, which uses a foreground–background adversarial reconstruction strategy. This approach trains the model to focus on critical foreground elements—such as pedestrians, vehicles, and traffic signs—while filtering out irrelevant visual details, mirroring how human drivers prioritize attention.
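As a rough illustration of the pruning step alone, score-based token pruning can be sketched as keeping the highest-scoring tokens and discarding the rest. This is a minimal sketch, not the authors' code: the function name `prune_tokens`, the toy tokens, and the saliency scores are all hypothetical, and it does not cover the adversarial reconstruction training that would produce real scores in ReconPruner.

```python
from typing import List, Sequence, Tuple


def prune_tokens(
    tokens: Sequence[Sequence[float]],
    scores: Sequence[float],
    keep_ratio: float,
) -> Tuple[List[Sequence[float]], List[int]]:
    """Keep the highest-scoring tokens, preserving their original order.

    Hypothetical helper for illustration; in a real system the scores
    would come from a trained pruner rather than being hand-written.
    """
    if len(tokens) != len(scores):
        raise ValueError("tokens and scores must have the same length")
    if not 0.0 < keep_ratio <= 1.0:
        raise ValueError("keep_ratio must be in (0, 1]")

    num_keep = max(1, int(len(tokens) * keep_ratio))
    # Rank token indices by score, highest first (ties broken by position).
    ranked = sorted(range(len(tokens)), key=lambda i: (-scores[i], i))
    # Restore spatial order so downstream layers see tokens in sequence.
    kept = sorted(ranked[:num_keep])
    return [tokens[i] for i in kept], kept


# Toy example: 8 one-dimensional tokens with made-up saliency scores.
toy_tokens = [[float(i)] for i in range(8)]
toy_scores = [0.1, 0.9, 0.2, 0.8, 0.05, 0.7, 0.3, 0.6]
pruned_tokens, kept_indices = prune_tokens(toy_tokens, toy_scores, keep_ratio=0.5)
print(kept_indices)  # [1, 3, 5, 7]
```

Because a pruner of this kind only selects a subset of tokens before they reach the language model, it can in principle be attached to an existing VLA model without retraining that model, which is the sense of "plug-and-play" here.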

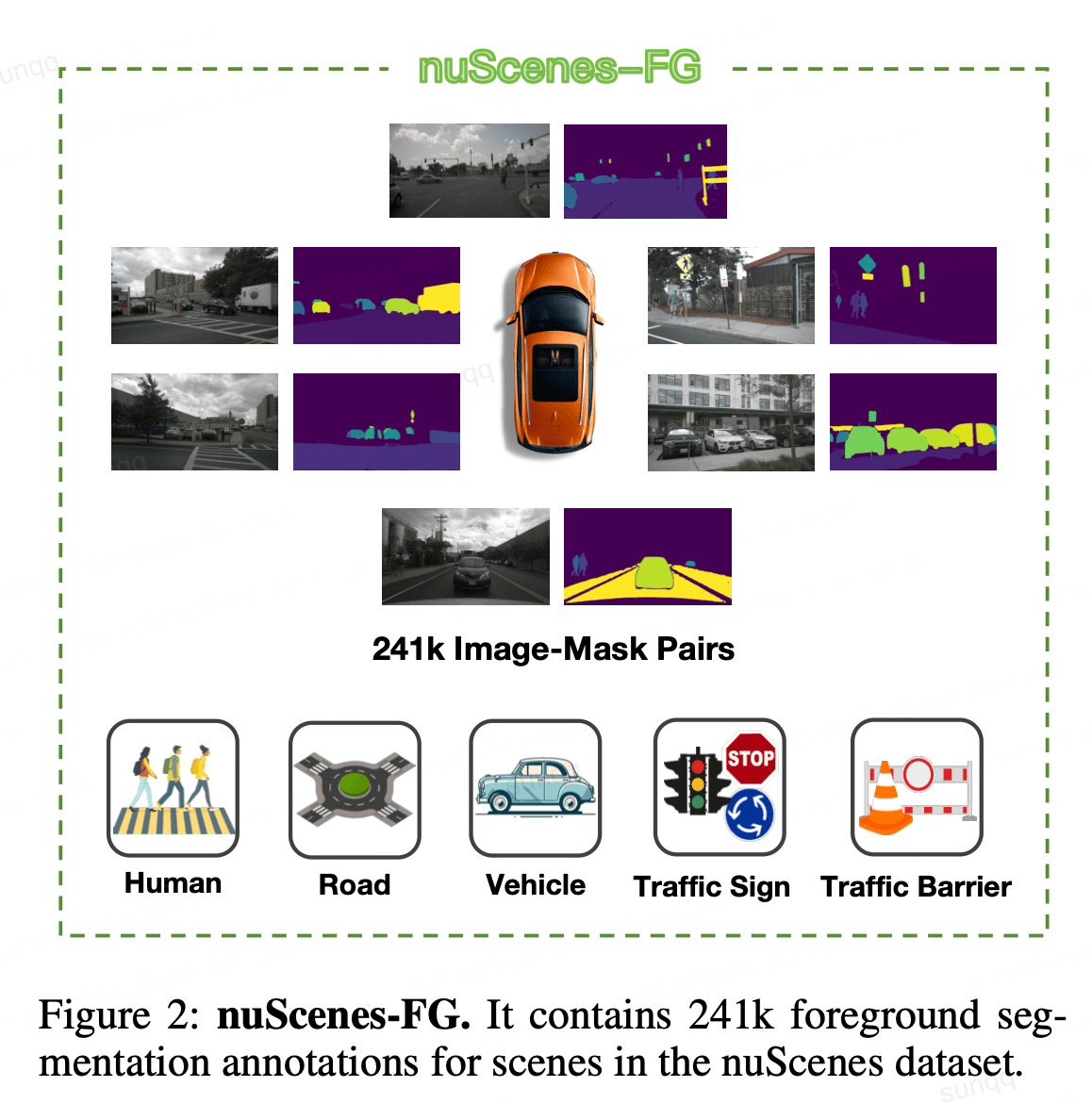

To support training, the team built nuScenes-FG, a large-scale foreground segmentation dataset containing 241,000 image–mask pairs. Experimental results on the nuScenes benchmark show that pruning 25% of visual tokens results in almost no performance loss, while 50% pruning maintains balanced performance across metrics. Reducing tokens from 3,249 to 812 (a 75% reduction) cuts computation by nearly 7.5× and significantly lowers inference latency.
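The token counts above can be checked with a few lines of arithmetic. The sketch below uses only the two figures quoted in the article; the variable names are illustrative. Note that the token count falls by about 4×, while the reported computation saving is nearly 7.5×. The article does not explain the gap; one plausible reason (an assumption here, not a claim from the source) is that self-attention cost grows faster than linearly with the number of tokens.

```python
# Token counts quoted in the article.
tokens_before = 3249
tokens_after = 812

keep_fraction = tokens_after / tokens_before
pruned_fraction = 1.0 - keep_fraction
token_reduction = tokens_before / tokens_after

print(f"kept: {keep_fraction:.1%}, pruned: {pruned_fraction:.1%}")
print(f"token count reduced {token_reduction:.2f}x")
# kept: 25.0%, pruned: 75.0%
# token count reduced 4.00x
```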

This marks another appearance for XPeng at a top-tier AI conference this year, following its earlier presentation at the CVPR Workshop on Autonomous Driving (WAD). The research sets a new benchmark for efficient deployment of large models in real-world, vehicle-side environments.

Source: XPeng